Night 02:00 AM

Day: Tuesday

A call comes in suddenly from a friend.

"Sir, I had an RSTP ring, and I created a new traffic link on the same ring so that another service flow could be created for a different customer. However, as soon as I created the link, my existing service went down."

Prima facie, when a transport person hears such a call, it seems very weird. How can one customer's traffic go down when you have only created a separate link in your topology? For anyone who deals with transport, and especially for someone actually doing transport operations, such a statement is weird.

However, let us not forget that Provider Bridge has some traits that differ from conventional transport. They are not out of this world, but as I discussed in my earlier blog post, in TDM the trail or cross-connect is the service element, whereas in Provider Bridge the trail or cross-connect is NOT the service element; it is only the INFRASTRUCTURE. The actual service element is the VPN. So services are isolated not by means of the trail but by means of the SVLAN.

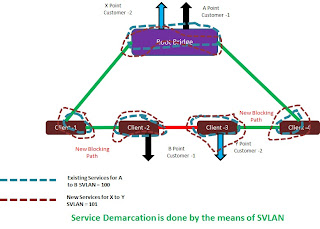

Now let us understand what this guy had done. Go back to the figure above: a service between A and B has already been created.

Traffic is already flowing from Point A to Point B, which is located at Client-2. Now there is one more customer, a different one, that takes its handoff from Client-3.

So what was the first step taken by the transport engineer?

Now see what happens when he does this. He decides to put this new customer on a separate link between the Root and Client-3, forgetting that the RSTP topology has already converged and that the service of customer-1 is already running in this topology. Ignoring all of this, he creates another trail in the topology, and this is what happens to RSTP after he creates the new link.

Now, RSTP is bound by its inherent rules: if there are n paths to the root bridge, only one of them forwards and the other n-1 are put in the blocking state. So in this case the blocking paths are recalculated. Now the path between Client-1 and Client-2 is blocking, and so is the path between Client-3 and Client-4. The new link from the Root to Client-3, however, is in forwarding mode.
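The recalculation can be illustrated with a simplified spanning-tree sketch. It assumes the ring order is Root, Client-1, Client-2, Client-3, Client-4 (the original figure is not reproduced here), gives every link the same cost, and uses hypothetical bridge IDs chosen so that the outcome matches the blocking pattern just described. Real RSTP also uses speed-based path costs and port-ID tie-breaks, which this sketch leaves out.

```python
import heapq

# Hypothetical bridge IDs (lower is better); not taken from the original figure.
BRIDGE_ID = {"Root": 1, "C3": 2, "C1": 3, "C2": 4, "C4": 5}
# Assumed ring order Root-C1-C2-C3-C4-Root, plus the new Root-C3 link.
RING = [("Root", "C1"), ("C1", "C2"), ("C2", "C3"), ("C3", "C4"), ("C4", "Root")]
NEW_LINK = ("Root", "C3")


def blocked_links(links, bridge_id, link_cost=1):
    """Simplified RSTP: each non-root bridge forwards on one root port; other links block."""
    neighbours = {b: [] for b in bridge_id}
    for a, b in links:
        neighbours[a].append(b)
        neighbours[b].append(a)
    root = min(bridge_id, key=bridge_id.get)  # lowest bridge ID wins the root election

    # Dijkstra from the root gives every bridge its root path cost.
    cost = {root: 0}
    heap = [(0, root)]
    while heap:
        c, node = heapq.heappop(heap)
        if c > cost[node]:
            continue  # stale heap entry
        for nb in neighbours[node]:
            if c + link_cost < cost.get(nb, float("inf")):
                cost[nb] = c + link_cost
                heapq.heappush(heap, (cost[nb], nb))

    # Root port: lowest (path cost via neighbour, neighbour's bridge ID).
    forwarding = set()
    for node in bridge_id:
        if node != root:
            best = min(neighbours[node], key=lambda nb: (cost[nb] + link_cost, bridge_id[nb]))
            forwarding.add(frozenset((node, best)))

    # Every link that is not some bridge's root-port link ends up blocked.
    return sorted(tuple(sorted(link)) for link in map(frozenset, links) if link not in forwarding)


print(blocked_links(RING, BRIDGE_ID))               # [('C2', 'C3')]
print(blocked_links(RING + [NEW_LINK], BRIDGE_ID))  # [('C1', 'C2'), ('C3', 'C4')]
```

With five bridges, any loop-free topology keeps exactly four links forwarding, so adding a sixth link forces a second link into blocking.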

RSTP has done its job, but what about the service? The VPNs were built round the ring and are still there. So the traffic from Point A to Point B, which can only travel where the VPNs are, encounters two blocking paths. This is shown in the figure below.
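Why the service dies can be checked with a small reachability sketch. A link carries Service-1 only if RSTP forwards it and the VPN includes that WAN port at both ends. The model is hypothetical: Point A is assumed to sit at the Root, B is at Client-2 as stated above, and the blocked links are the two named in the text.

```python
from collections import deque

# Hypothetical model: A sits at the Root (assumption), B at Client-2.
LINKS = [("Root", "C1"), ("C1", "C2"), ("C2", "C3"), ("C3", "C4"), ("C4", "Root"), ("Root", "C3")]
# Blocking links after reconvergence, as described in the text.
BLOCKED = {frozenset(("C1", "C2")), frozenset(("C3", "C4"))}
# Service-1 VPN built round the original ring: per node, the neighbours whose
# WAN port is a VPN member. The new Root-C3 link is in no VPN.
RING_VPN = {
    "Root": {"C1", "C4"},
    "C1": {"Root", "C2"},
    "C2": {"C1", "C3"},
    "C3": {"C2", "C4"},
    "C4": {"C3", "Root"},
}


def service_up(src, dst, links, blocked, vpn):
    """True if src reaches dst over links that RSTP forwards AND the VPN includes at both ends."""
    usable = {}
    for a, b in links:
        if frozenset((a, b)) not in blocked and b in vpn[a] and a in vpn[b]:
            usable.setdefault(a, []).append(b)
            usable.setdefault(b, []).append(a)
    seen, queue = {src}, deque([src])
    while queue:  # breadth-first search over usable links only
        node = queue.popleft()
        for nb in usable.get(node, []):
            if nb not in seen:
                seen.add(nb)
                queue.append(nb)
    return dst in seen


print(service_up("Root", "C2", LINKS, BLOCKED, RING_VPN))  # False
```

Both VPN paths round the ring hit a blocked link, and the only forwarding path (Root to Client-3 to Client-2) uses a port that is not in the VPN.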

This brings the whole of Service-1 down, and that is why my friend called me.

So is this a fault???? NO

Is this a configuration ERROR????? YES

So what would have been the ideal way to configure Service-2 in this case, without hampering any traffic?

To answer this, we should go back to the previous posts, where services are demarcated by means of the SVLAN.

As you can see in this figure, you have a different VPN for each service in the same topology, separated by different SVLANs. The SVLAN, or service VLAN, separates the services so that there is no crosstalk between them, even though they share the same infrastructure.
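A minimal sketch of per-SVLAN forwarding on one provider bridge, assuming each SVLAN has its own member-port set (its VPN) and its own MAC learning table. The SVLAN IDs 100 and 200 and the port names are hypothetical. Both services share the WAN ports, yet a frame of one SVLAN can never egress a port that belongs only to the other.

```python
class ProviderBridge:
    """Sketch: each SVLAN is an isolated forwarding domain on shared ports."""

    def __init__(self):
        self.members = {}  # svlan -> set of ports in that service's VPN
        self.fdb = {}      # (svlan, mac) -> port, learned separately per SVLAN

    def add_vpn(self, svlan, ports):
        self.members[svlan] = set(ports)

    def receive(self, svlan, in_port, src_mac, dst_mac):
        """Return the set of egress ports for a frame tagged with `svlan`."""
        ports = self.members.get(svlan, set())
        if in_port not in ports:
            return set()                      # ingress port not in this VPN: drop
        self.fdb[(svlan, src_mac)] = in_port  # learn the source within this SVLAN only
        out = self.fdb.get((svlan, dst_mac))
        if out is not None:
            return {out} - {in_port}
        return ports - {in_port}              # unknown destination: flood inside the VPN only


bridge = ProviderBridge()
bridge.add_vpn(100, ["WAN1", "WAN2", "UNI-A"])  # hypothetical Service-1
bridge.add_vpn(200, ["WAN1", "WAN2", "UNI-X"])  # hypothetical Service-2
print(sorted(bridge.receive(100, "UNI-A", "aa", "bb")))  # ['WAN1', 'WAN2']
```

Because both the member set and the learning table are keyed by SVLAN, a MAC address learned in one service has no effect on forwarding in the other.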

Each service can be rate-limited to its bandwidth (BW) cap by means of a policy, and then both VPN services can be delivered on the same infrastructure. In this way, multiple services can be delivered.
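One common way to enforce such a cap is a token-bucket policer. The sketch below is a generic two-colour, single-rate policer, not any particular vendor's policy engine. The committed rate (`cir_bps`) and burst size (`cbs_bytes`) are hypothetical parameters, and one policer instance would be attached per SVLAN.

```python
class TokenBucketPolicer:
    """Two-colour single-rate policer: traffic within the cap passes, excess is dropped."""

    def __init__(self, cir_bps, cbs_bytes):
        self.rate = cir_bps / 8.0       # token fill rate in bytes per second
        self.capacity = cbs_bytes       # maximum burst in bytes
        self.tokens = float(cbs_bytes)  # the bucket starts full
        self.last = 0.0                 # time of the last update, in seconds

    def allow(self, size_bytes, now):
        # Refill for the elapsed time, never beyond the burst size.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if size_bytes <= self.tokens:
            self.tokens -= size_bytes   # conforming frame consumes tokens
            return True
        return False                    # exceeding frame is dropped, tokens untouched


# Hypothetical per-SVLAN caps: 10 Mbit/s for SVLAN 100, 20 Mbit/s for SVLAN 200.
policers = {100: TokenBucketPolicer(10_000_000, 15_000),
            200: TokenBucketPolicer(20_000_000, 30_000)}
print(policers[100].allow(1500, now=0.0))  # True
```

Keeping one bucket per SVLAN means a burst on one service consumes only that service's tokens, which is what lets several capped services share the same ring.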

This results in proper ring optimization and good control of the traffic.

But does that mean that you cannot have multiple topologies in the RSTP configuration?

Must RSTP always be in the form of a RING?

The answer is NO...

RULE NO 5: YOU CAN SIMULATE MULTIPLE ALTERNATE LINES OF DELIVERY WITH RSTP; HOWEVER, CARE HAS TO BE TAKEN THAT THE SERVICE IS ALSO MAPPED ACCORDINGLY.

So let us see how Service-1 between A and B should have been mapped when another link is present between the Root and Client-3, assuming the same topology convergence that happened before.

If we look at this figure and consider the VPN creation for Service-1 between A and B, the changes are at the Root and at Client-3.

At the Root, where earlier you had only two WAN ports added, you now add all three WAN ports.

And at Client-3, instead of making a transit VPN with two WAN ports, we now make a transit VPN with three WAN ports.

This also gives double-failure protection, as we now have two alternate paths instead of one; however, the most important thing is that the service is mapped in accordance with the topology.

The same rules and infrastructure also apply to the service from X to Y.

The main thing to remember is that RSTP is an algorithm that depends on the topology you create, so if RSTP is chosen, the services have to be traced out according to that topology.

It is just like a linear equation: if you have two variables and only one reference equation, you will not be able to solve it. Hence you need to keep one part variable and the other part fixed as the reference.

If you are using RSTP:

1. The RSTP algorithm takes care of the topology transitions in the case of failure. (This is the variable, varying part of your network.)

2. The service should include all the WAN ports that are part of the RSTP topology. (This should be the constant part.)
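The variable/constant split can be checked exhaustively with a sketch. It enumerates every loop-free topology (spanning tree) that RSTP could converge to on the assumed ring plus the Root-Client-3 link, then tests Service-1 against each. The node names, the ring order, and A's location at the Root are assumptions. `FULL_VPN` follows the corrected mapping above, with three WAN ports at the Root and at Client-3.

```python
from collections import deque
from itertools import combinations

# Hypothetical topology: ring Root-C1-C2-C3-C4-Root plus the new Root-C3 link.
NODES = ["Root", "C1", "C2", "C3", "C4"]
LINKS = [("Root", "C1"), ("C1", "C2"), ("C2", "C3"), ("C3", "C4"), ("C4", "Root"), ("Root", "C3")]

RING_VPN = {  # Service-1 built round the original ring only
    "Root": {"C1", "C4"}, "C1": {"Root", "C2"}, "C2": {"C1", "C3"},
    "C3": {"C2", "C4"}, "C4": {"C3", "Root"},
}
FULL_VPN = {  # every WAN port of the RSTP topology is a member
    "Root": {"C1", "C4", "C3"}, "C1": {"Root", "C2"}, "C2": {"C1", "C3"},
    "C3": {"C2", "C4", "Root"}, "C4": {"C3", "Root"},
}


def reachable(src, dst, edges):
    adj = {}
    for a, b in edges:
        adj.setdefault(a, []).append(b)
        adj.setdefault(b, []).append(a)
    seen, queue = {src}, deque([src])
    while queue:
        node = queue.popleft()
        for nb in adj.get(node, []):
            if nb not in seen:
                seen.add(nb)
                queue.append(nb)
    return dst in seen


def spanning_trees(nodes, links):
    """Every set of len(nodes)-1 links that connects all nodes (the variable part)."""
    for tree in combinations(links, len(nodes) - 1):
        if all(reachable(nodes[0], n, tree) for n in nodes):
            yield tree


def service_up(src, dst, tree, vpn):
    # A forwarding link carries the service only if its port is in the VPN at both ends.
    usable = [(a, b) for a, b in tree if b in vpn[a] and a in vpn[b]]
    return reachable(src, dst, usable)


trees = list(spanning_trees(NODES, LINKS))
print(len(trees))                                                # 11
print(all(service_up("Root", "C2", t, FULL_VPN) for t in trees))  # True
print(all(service_up("Root", "C2", t, RING_VPN) for t in trees))  # False
```

Whichever of the 11 trees RSTP picks, the service with every WAN port mapped stays up; the ring-only mapping survives only the trees that avoid the new link.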

In any dynamic algorithm that relies on topology transitions (OSPF/ERP/IS-IS/BGP), the service is always built so that the routing has all the WAN links available as possibilities.

The same is true of RSTP.

My advice to my transmission fraternity:

1. Once you map the service as per the topology, things work perfectly.

2. There is no need to create new topologies for new services, because you are actually unbalancing the equation.

3. If you do need to provide alternate paths, make sure that the ports are also mapped accordingly along the path.

HAVE A SUPERB WEEKEND AHEAD.........

Cheers....

Kalyan

Coming up next:

How does Switching take place in RSTP